Autonomous vehicles use sensors to observe their environment. To efficiently validate vehicle functions at an early stage, the environment, sensors, and vehicle must be realistically simulated and tested in virtual driving tests. For this purpose, dSPACE offers an integrated tool chain comprising powerful hardware and software.

There is no longer any doubt as to whether autonomous vehicles will become part of road traffic. The real question is when this is expected to happen. Level 4 vehicles that are ready for series production are predicted for 2020/21. To reach this goal, validating the driving functions while they are still in development is crucial. Developers must therefore be able to simulate driving functions in combination with environment sensors (camera, lidar, radar, etc.) in the laboratory in quasi-real traffic scenarios. The alternative to this would involve conducting real test drives on the road, a practice which is not feasible simply because it would require driving many millions of kilometers in real vehicles to cover all mandatory scenarios.

Conditions for the Validation Process

A number of conditions are important when validating functions for autonomous driving:

- Functions for autonomous and highly automated driving are extremely complex, partly because the measured values from numerous (sometimes more than 40) different sensors (camera, lidar, radar, etc.) must be taken into account simultaneously.

- The variety of traffic scenarios to be tested (vehicles, pedestrians, traffic signs, etc.) is almost unlimited, which means that testing requires complex virtual 3-D scenes.

- The physical processes involved in emitting or capturing light pulses, microwaves, etc. must be integrated as physical environment and sensor models, which are rather computation-intensive. This also includes effects of the object material properties, such as permittivity and roughness.

- Many millions of kilometers have to be covered during testing to ensure compliance with the ISO 26262 standard (Road vehicles – Functional safety). Moreover, particular attention must be paid to critical driving situations to make sure the correct types of kilometers are being driven as required.

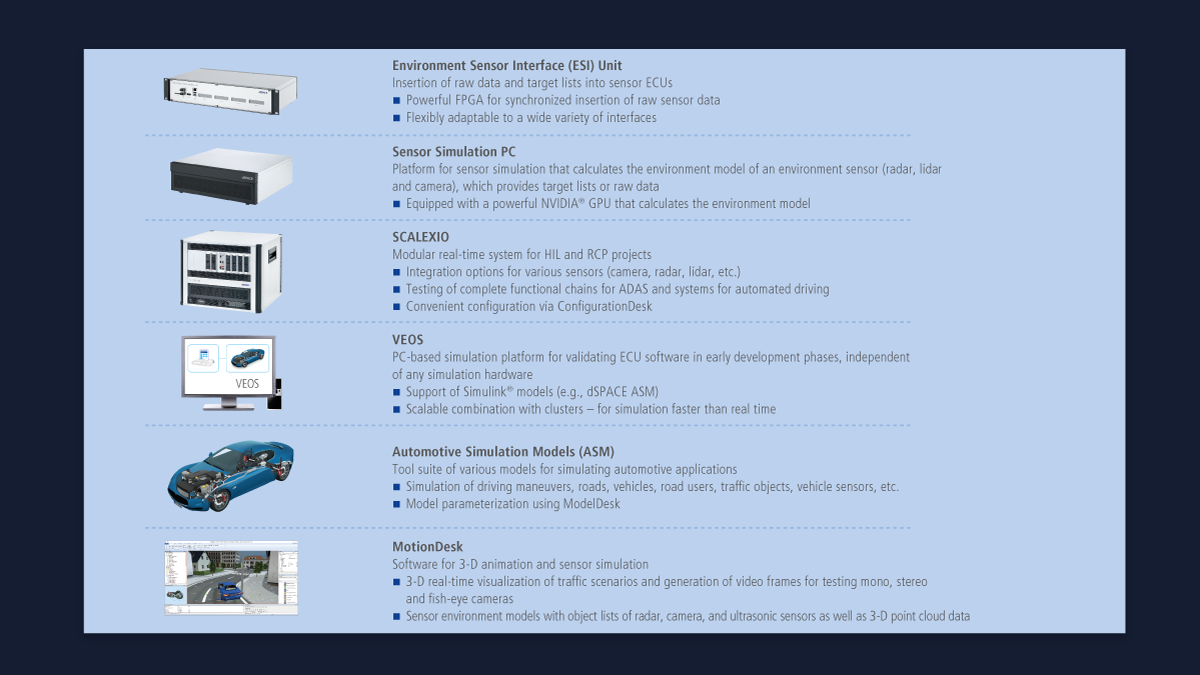

dSPACE provides a powerful tool chain consisting of hardware and software that takes all of the prerequisites for sensor simulation into account throughout the entire development process. This helps developers identify errors at an early stage and contributes to the design of an extremely efficient test process.

Simulation is Mandatory

To meet the requirements of the validation process, the driving functions must be verified and validated at all stages of the development process. Simulation is the most efficient method to do this. As functions for autonomous driving are highly complex, it is essential that the related test cases are reproducible and reusable at all stages of the development process, from model-in-the-loop (MIL) to software-in-the-loop (SIL) to hardware-in-the-loop (HIL), across all platforms. This can be achieved only with an integrated tool chain.

The dSPACE tool chain supports high-precision sensor simulation throughout the entire development process.

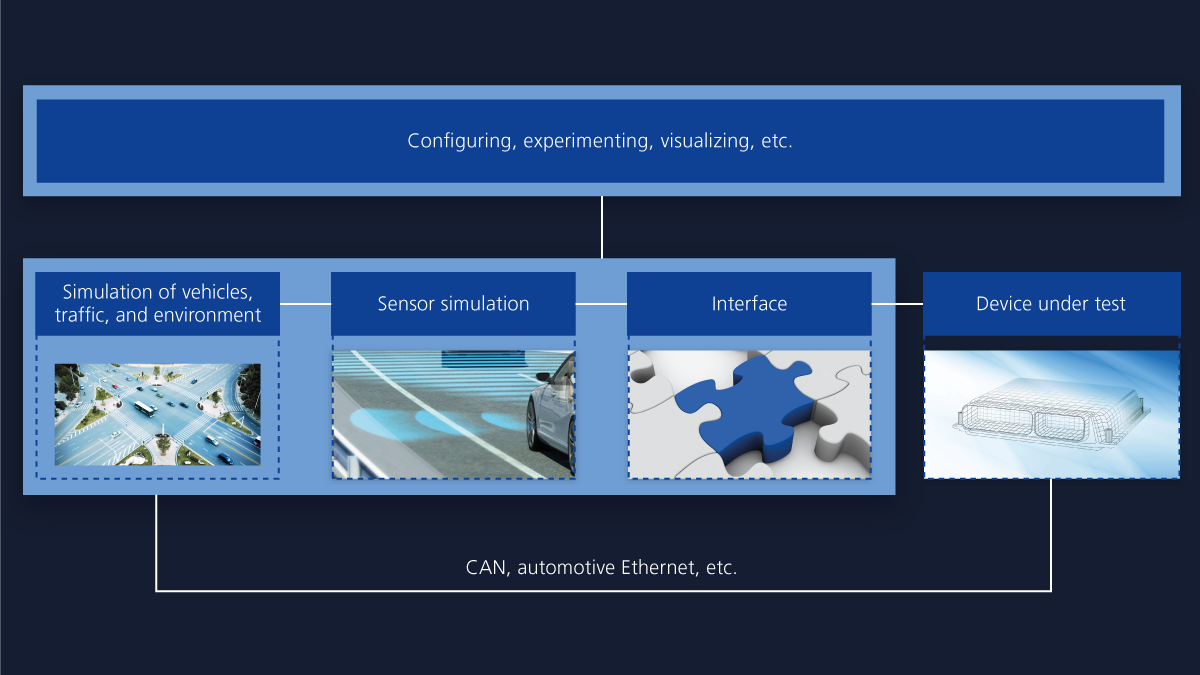

Structure of the Simulation Environment

The structure of a closed-loop simulation is identical for MIL/SIL and HIL (figure 1). The simulation essentially covers the vehicle, traffic, and environment as well as sensor simulation. An interface is used for connecting the sensor simulation to the device under test (control unit or function software for autonomous driving). Furthermore, the user needs tools for tasks such as configuring simulation models, conducting experiments, and visualizing scenes. Support for common interfaces and standards, such as FMI, XIL-API, OpenDrive, OpenCRG, OpenScenario, and Open Simulation Interface, also plays an important role, because it helps integrate data from the German In-Depth Accident Study (GIDAS) accident database or couple traffic simulation tools via co-simulation.

Simulation of Vehicles, Traffic, and Environment

The basis for the sensor simulation is a traffic simulation in which different road users interact with one another. For this, dSPACE offers the Automotive Simulation Models (ASM) tool suite, which makes it possible to define virtual test drives in virtual environments. The ASM Traffic model calculates the movement of road users and can therefore simulate passing maneuvers, lane changes, traffic on intersections, etc. Sensor models play a decisive role in the interactions between the vehicle and the virtual environment.

Structure and Functionality of Sensors

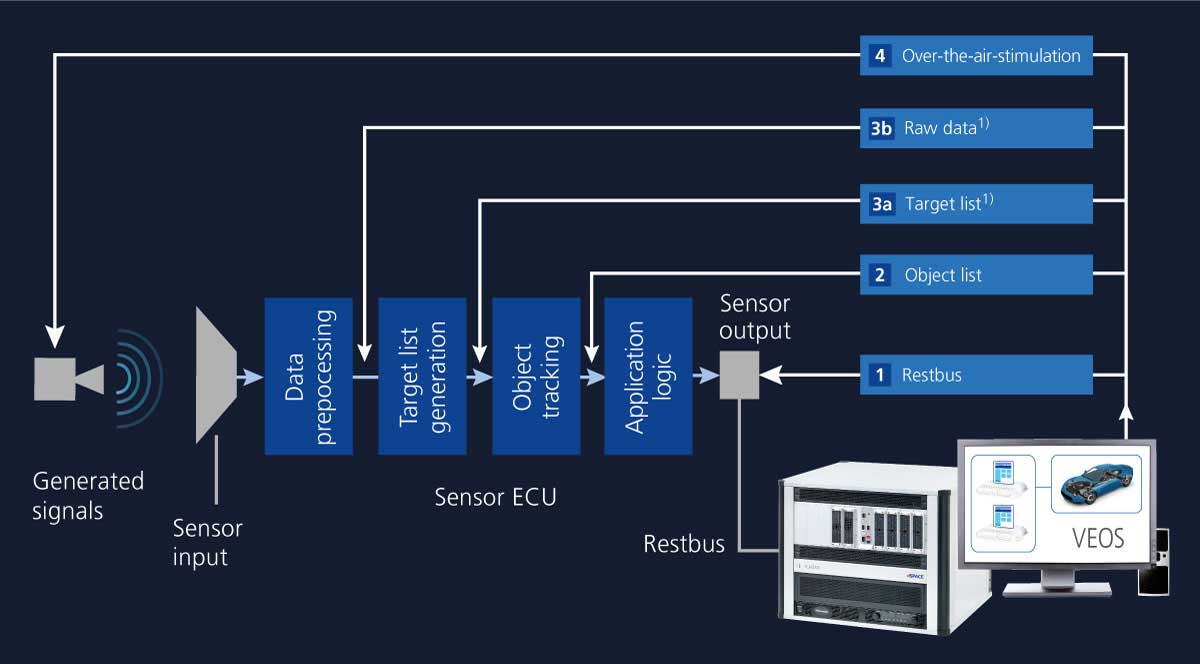

The aim of using sensors is to detect objects by first using sensor raw data to determine targets. The data can include a local accumulation of recorded reflection points at a constant relative velocity, for example. In the next step, classified objects (cars, pedestrians, traffic signs, etc.) are identified from the characteristic arrangement of these points. Camera, radar, and lidar sensors are very similar in their basic design: They consist of a front end which usually preprocesses the data. In a subsequent step, a data processing unit generates a raw data stream and outputs a target list. Next, another unit generates the object list and thus supplies the position data. This is followed by the application logic and network management.
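The step from reflection points to targets can be illustrated with a minimal sketch. It assumes a simple greedy clustering in which a point joins a cluster if it lies close to the cluster centroid and has a similar relative velocity. The class names, thresholds, and the clustering rule are hypothetical and are not taken from any real sensor.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Reflection:
    x: float      # longitudinal position in m
    y: float      # lateral position in m
    v_rel: float  # relative velocity in m/s


@dataclass
class Target:
    x: float
    y: float
    v_rel: float
    n_points: int


def _mean(values):
    values = list(values)
    return sum(values) / len(values)


def cluster_targets(points, max_dist=1.5, max_dv=0.5):
    """Group reflection points into targets (hypothetical thresholds).

    A point joins the first cluster whose centroid is within max_dist
    and whose mean relative velocity differs by at most max_dv.
    """
    clusters = []
    for p in points:
        for c in clusters:
            cx, cy = _mean(q.x for q in c), _mean(q.y for q in c)
            cv = _mean(q.v_rel for q in c)
            if hypot(p.x - cx, p.y - cy) <= max_dist and abs(p.v_rel - cv) <= max_dv:
                c.append(p)
                break
        else:
            clusters.append([p])  # no matching cluster: start a new one
    return [
        Target(_mean(q.x for q in c), _mean(q.y for q in c),
               _mean(q.v_rel for q in c), len(c))
        for c in clusters
    ]


points = [
    Reflection(20.0, 0.0, -5.0), Reflection(20.5, 0.3, -5.1),
    Reflection(20.2, -0.2, -4.9), Reflection(40.0, 3.0, 2.0),
    Reflection(40.4, 3.2, 2.1),
]
targets = cluster_targets(points)
```

Classifying the resulting targets as cars, pedestrians, or traffic signs would be a separate step that this sketch does not cover.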

Integration Options for the Sensors Used in Sensor Simulation

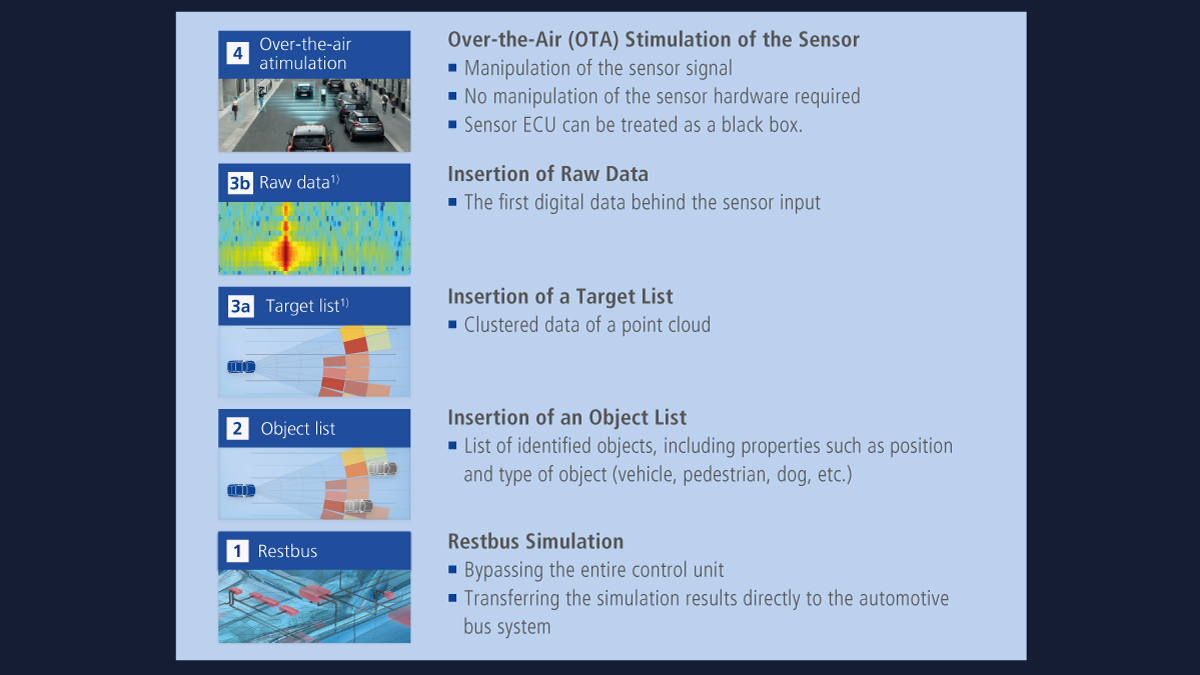

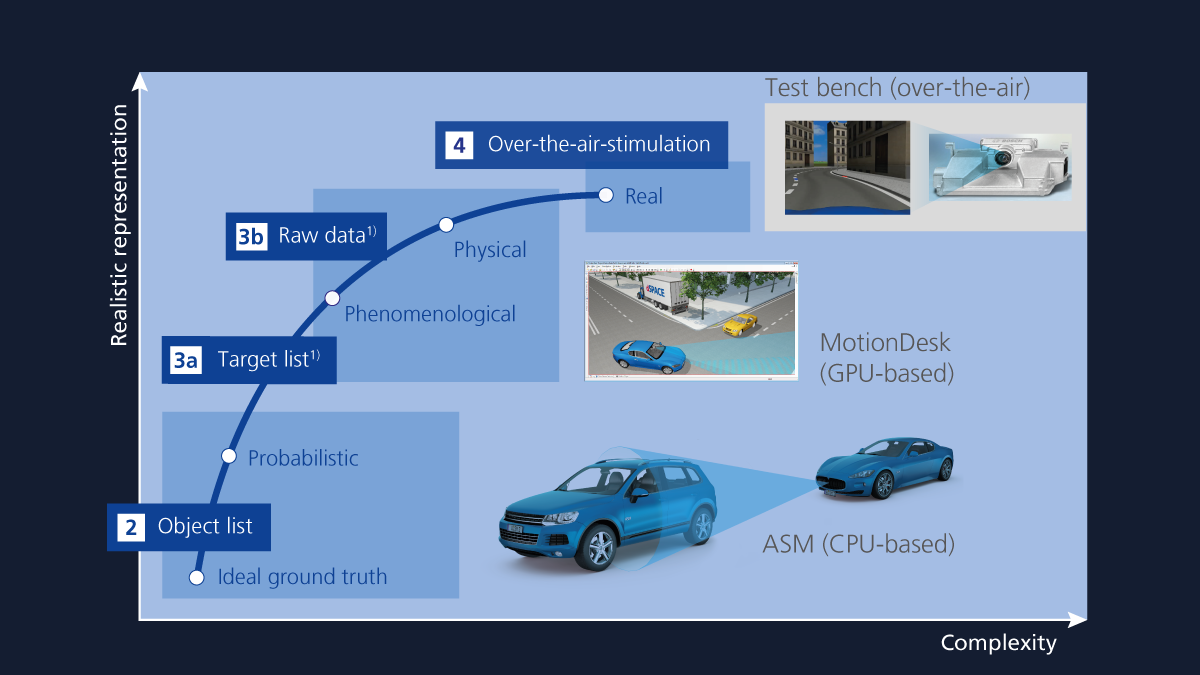

The similar structures of the different sensor types facilitate the integration of sensors in the simulation, and suitably prepared simulation data can be inserted into the individual data processing units as required (figure 4). The appropriate integration option depends on how complete and realistic the properties of the sensor must be in the simulation and which part of the sensor is to be tested. Over-the-air (OTA) stimulation is an approach for feeding an environment simulation into a camera-based control unit (option 4), for example. The camera sensor captures the image of a monitor which displays the animated surrounding scenery. This approach allows for testing the entire processing chain, including the camera lens and the image sensor. Digital sensor data (option 3) can be divided into raw sensor data, i.e., data returned directly after data preprocessing (option 3b), and target lists (option 3a). For the camera sensor, for example, this is the image data stream (raw data) or a detected target (target list). The next level is object lists that contain classified targets and transaction data (option 2). Restbus simulation is used for sensor-independent tests (option 1). Simulation by means of object and target lists as well as raw data is an application scenario for both HIL and SIL simulation, while the OTA stimulation and the restbus simulation are exclusively HIL application cases.
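The integration options described above can be summarized as a small lookup table. The following sketch only restates the text; the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntegrationOption:
    option: str         # option number as used in the text
    injected_data: str  # what is fed into the sensor or control unit
    sil: bool           # usable in SIL simulation
    hil: bool           # usable in HIL simulation


OPTIONS = [
    IntegrationOption("1", "restbus simulation (sensor-independent)", sil=False, hil=True),
    IntegrationOption("2", "object list", sil=True, hil=True),
    IntegrationOption("3a", "target list", sil=True, hil=True),
    IntegrationOption("3b", "raw sensor data", sil=True, hil=True),
    IntegrationOption("4", "over-the-air stimulation", sil=False, hil=True),
]


def options_for(platform):
    """Return the option numbers available for 'SIL' or 'HIL'."""
    if platform not in ("SIL", "HIL"):
        raise ValueError("platform must be 'SIL' or 'HIL'")
    attr = platform.lower()
    return [o.option for o in OPTIONS if getattr(o, attr)]


sil_options = options_for("SIL")
hil_options = options_for("HIL")
```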

Physical/Phenomenological Models

dSPACE has developed highly precise physical sensor environment models for simulating camera, radar, and lidar sensors, which generally provide raw data or target lists. They are designed for calculations on graphics processing units (GPUs).

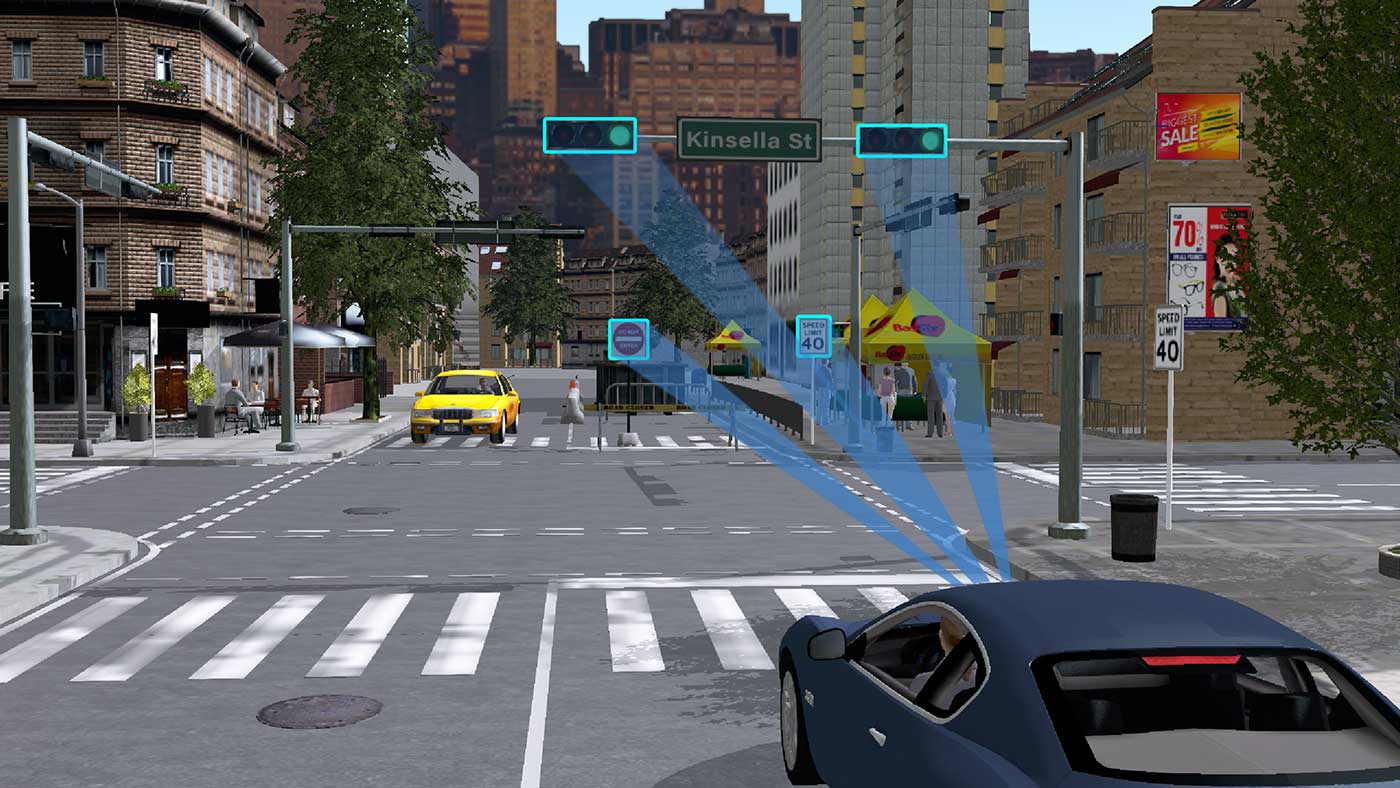

Camera Model

The validation of functions for camera-based assistance and automated driving essentially requires that different lens types as well as optical effects such as chromatic aberration or vignetting on the lens can be taken into account. Simulating different numbers of image sensors (mono/stereo camera) or multiple cameras for a panoramic view must also be an option. Furthermore, sensor characteristics such as color (monochromatic representation, Bayer pattern, HDR, etc.), pixel errors, and image noise play an important role for the validation.
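Two of the effects named above, vignetting and the Bayer pattern, can be sketched in a few lines. This is an illustration only and not the dSPACE camera model: it assumes the cos^4 law as an approximation of natural vignetting and an RGGB Bayer layout. The function names and the focal length parameter are hypothetical.

```python
import math


def vignette_gain(x, y, width, height, focal_px):
    """Brightness factor at pixel (x, y), assuming the cos^4 falloff law."""
    dx = x - (width - 1) / 2
    dy = y - (height - 1) / 2
    theta = math.atan(math.hypot(dx, dy) / focal_px)  # angle off the optical axis
    return math.cos(theta) ** 4


def bayer_rggb(rgb_image):
    """Keep one color channel per pixel, assuming an RGGB mosaic.

    rgb_image is a list of rows, each row a list of (r, g, b) tuples.
    """
    mosaic = []
    for y, row in enumerate(rgb_image):
        out = []
        for x, (r, g, b) in enumerate(row):
            if y % 2 == 0:
                out.append(r if x % 2 == 0 else g)  # even rows: R G R G ...
            else:
                out.append(g if x % 2 == 0 else b)  # odd rows:  G B G B ...
        mosaic.append(out)
    return mosaic


center = vignette_gain(2, 2, 5, 5, focal_px=4.0)
corner = vignette_gain(0, 0, 5, 5, focal_px=4.0)
mosaic = bayer_rggb([[(1, 2, 3), (4, 5, 6)], [(7, 8, 9), (10, 11, 12)]])
```

The image center keeps its full brightness, while the corners are darkened. A physical camera model would add further effects such as chromatic aberration, pixel errors, and noise.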

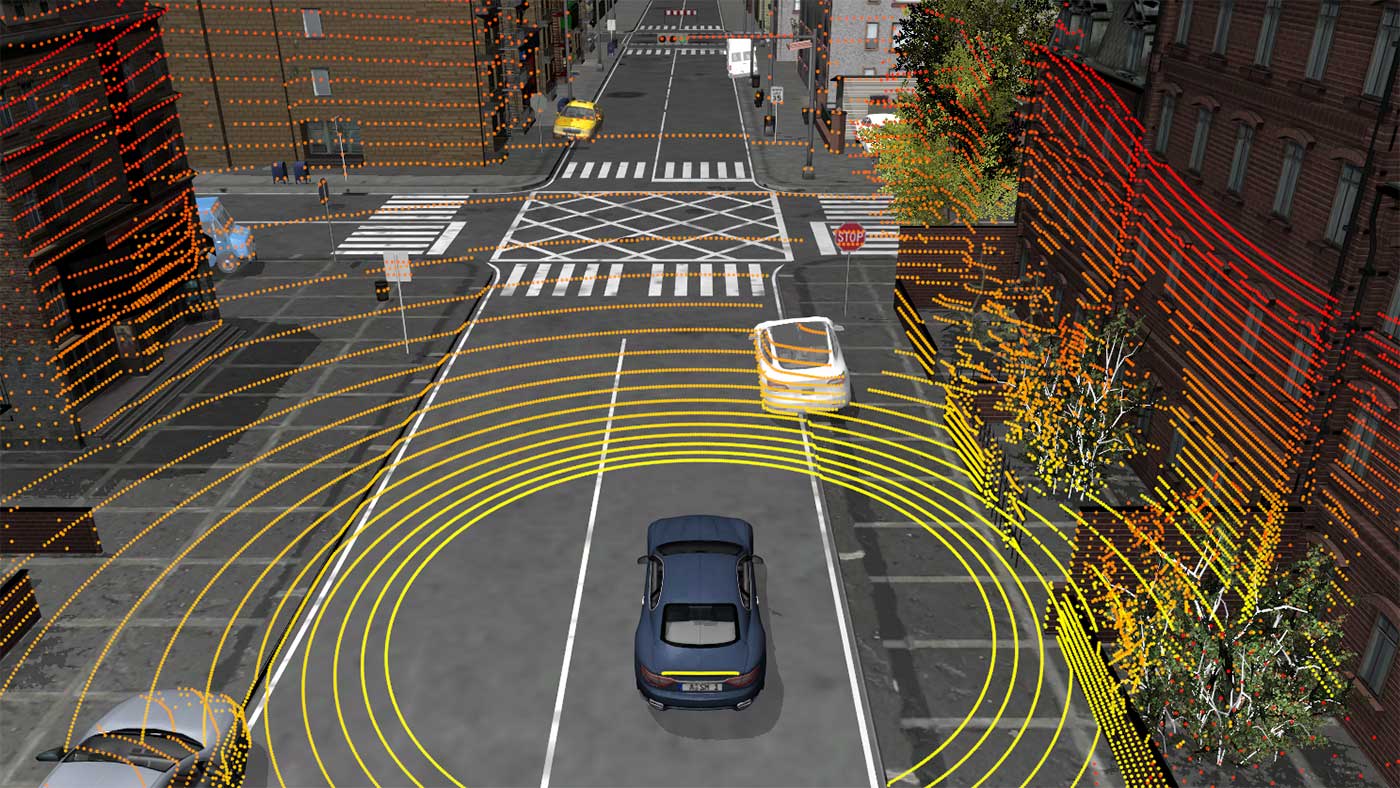

Lidar Model

Lidar systems emit laser pulses and measure the light reflected from an object. The distance of the object can then be calculated from the elapsed time. In addition to the distance, the system also determines the intensity of the reflected light, which depends on the surface condition of the object. This method of measurement allows for describing the environment in the form of a point cloud, i.e., the data is available as a target list that includes information on distance and intensity. Furthermore, in the lidar model it is essential that the specific operating modes of a sensor, including angular resolution, can be configured for the light waves, and that the objects used in the 3-D scene have surface properties, such as reflectivity. It must also be taken into consideration that the light is scattered differently by rain, snow, or fog and that a single light beam can be reflected by several objects. This results in an amplitude distribution across the sensor surface, which in turn depends on the environment (moving objects, immobile objects, etc.) or the time. The lidar model supports approaches ranging from point clouds to raw data.
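The distance calculation from the elapsed time can be written down directly: the pulse travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. The sketch below also shows one possible layout for an entry of a point cloud. The class and field names are hypothetical.

```python
import math
from dataclasses import dataclass

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tof_distance(elapsed_s):
    """Distance in m from the round-trip time of a laser pulse."""
    return SPEED_OF_LIGHT * elapsed_s / 2.0


@dataclass
class LidarTarget:
    azimuth_deg: float    # horizontal beam angle
    elevation_deg: float  # vertical beam angle
    distance_m: float
    intensity: float      # reflected intensity, here in arbitrary units


def to_cartesian(t):
    """Convert one target to (x, y, z) in the sensor coordinate system."""
    az = math.radians(t.azimuth_deg)
    el = math.radians(t.elevation_deg)
    x = t.distance_m * math.cos(el) * math.cos(az)
    y = t.distance_m * math.cos(el) * math.sin(az)
    z = t.distance_m * math.sin(el)
    return (x, y, z)


# A pulse that returns after 200 ns corresponds to roughly 30 m.
target = LidarTarget(0.0, 0.0, tof_distance(200e-9), intensity=0.8)
xyz = to_cartesian(target)
```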

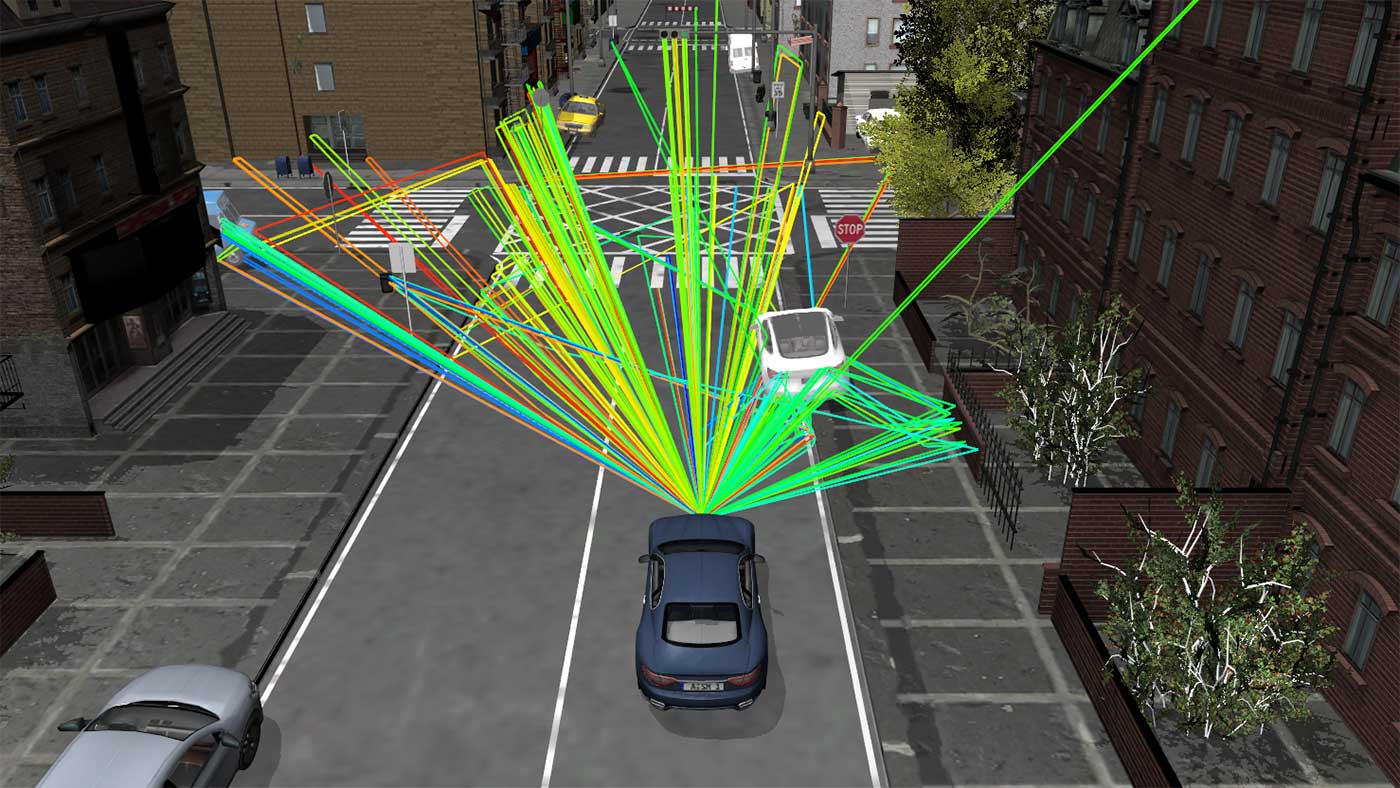

Radar Environment Model

Radar plays an important role in ADAS/AD driving functions, because it is robust in adverse weather conditions such as rain, snow, and fog as well as in extreme lighting conditions. The radar sensor can not only determine the type of objects, i.e., vehicles, pedestrians, cyclists, etc., but also measure their distance, their vertical and horizontal angles, and their relative and absolute speed. Potentially one of the most important radar technologies is frequency-modulated continuous wave (FMCW) radar, which transmits frequency-modulated signals. Modern radar systems transmit about 128 frequency-modulated signals in one measuring cycle. The radar detects the signal reflected by the object, also called the echo signal. The distance of the object is derived from the frequency difference between the transmitted signal and the echo signal. The speed of the object is determined by means of the Doppler frequency. The radar model from dSPACE realistically reproduces the behavior of the sensor path. For example, the model takes multipath propagation, reflections, and scattering into account.
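The two FMCW relations mentioned above can be sketched as follows. The example assumes a linear (sawtooth) frequency ramp, for which the range follows from the beat frequency between the transmitted and the echo signal, and it uses the standard Doppler relation for the speed. The 77 GHz carrier is a common automotive radar band; the bandwidth and ramp duration are hypothetical example values.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def fmcw_range(beat_hz, bandwidth_hz, chirp_s):
    """Range in m from the beat frequency of a linear frequency ramp.

    R = c * f_beat * T_chirp / (2 * B)
    """
    return SPEED_OF_LIGHT * beat_hz * chirp_s / (2.0 * bandwidth_hz)


def doppler_velocity(doppler_hz, carrier_hz):
    """Relative radial speed in m/s from the Doppler frequency.

    v = f_doppler * wavelength / 2
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return doppler_hz * wavelength / 2.0


# Hypothetical ramp: 300 MHz bandwidth swept in 50 microseconds.
distance_m = fmcw_range(beat_hz=1.0e6, bandwidth_hz=300e6, chirp_s=50e-6)
speed_mps = doppler_velocity(doppler_hz=5136.887, carrier_hz=77e9)
```

With these values, a beat frequency of 1 MHz corresponds to a range of about 25 m, and a Doppler frequency of about 5.1 kHz corresponds to a relative speed of about 10 m/s.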

Types of Sensor Modeling

Each of the different sensor integration options requires a sensor model that provides suitably processed data. Essentially, the models for the sensor simulation can be classified according to how complex and close to reality they are (figure 3). Two types of models return object or target lists: ground truth models based on real reference data and probabilistic models based on the probability of events or states. The raw data is generally supplied by phenomenological models, i.e., based on the parameters of events and states, or by physical models, i.e., based on mathematically formulated laws. Depending on the application and time of the development, the driving functions to be developed can be validated by suitable models.

Ground Truth and Probabilistic Models

The ASM Traffic simulation model contains a range of sensor models for performing tests at object list level in a SIL or HIL case:

- Radar sensor 3-D

- Object sensor 2-D/3-D

- Custom sensor

- Road sign sensor

- Lane marking sensor

These sensor models are designed for CPU-based simulation with the VEOS or SCALEXIO platform. Based on an object list, probabilistic models can superimpose realistic effects, such as environmental conditions for fog and rain, as well as multiple targets per detected object. All of this can be used to emulate the radar characteristics and calculate a target list.
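How a probabilistic model might turn an object list into a target list can be sketched as follows. This is not the ASM implementation. It assumes that weather is reduced to a single detection probability, that each object yields a fixed number of candidate targets, and that measurement errors are Gaussian. All names and parameter values are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class SimObject:
    obj_id: int
    distance_m: float
    rel_speed_mps: float


@dataclass
class RadarTarget:
    obj_id: int
    distance_m: float
    rel_speed_mps: float


def object_list_to_targets(objects, detection_prob, targets_per_object=3,
                           range_sigma_m=0.2, speed_sigma_mps=0.1, rng=None):
    """Superimpose dropouts and noise on ground truth objects.

    detection_prob models environmental conditions such as fog or rain:
    the lower the value, the more candidate targets are dropped.
    """
    rng = rng or random.Random()
    targets = []
    for obj in objects:
        for _ in range(targets_per_object):
            if rng.random() >= detection_prob:
                continue  # candidate target is lost
            targets.append(RadarTarget(
                obj.obj_id,
                obj.distance_m + rng.gauss(0.0, range_sigma_m),
                obj.rel_speed_mps + rng.gauss(0.0, speed_sigma_mps),
            ))
    return targets


objects = [SimObject(1, 30.0, -2.0), SimObject(2, 55.0, 1.0)]
clear = object_list_to_targets(objects, detection_prob=1.0, rng=random.Random(0))
blocked = object_list_to_targets(objects, detection_prob=0.0, rng=random.Random(0))
```

Passing a seeded random number generator keeps the test case reproducible, which matters when the same scenario is reused across MIL, SIL, and HIL.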

Validation via Software-in-the-Loop Simulation

By using software-in-the-loop (SIL) simulation, the software of a sensor-based control unit can be validated virtually with the aid of standard PC technology. The simulation allows for testing the algorithms at an early stage when hardware prototypes are not yet available. In addition, VEOS (figure 1) and the corresponding models allow for calculations that run faster than real time. The additional option of performing the calculations on scalable PC clusters increases the speed even more. This makes it possible to manage the considerable variety and number of test cases, and also to perform tests that require covering millions of test kilometers in a timely manner. In a SIL simulation, the vehicle, environment, and traffic simulations as well as virtual control units, if applicable, are calculated with the aid of VEOS. If a simulation is based on ground truth and probabilistic models, these models are also integrated into VEOS. Whenever phenomenological or physical sensor models are used, an application for sensor simulation (camera, radar, lidar), which is calculated on a GPU, runs on the same platform as VEOS. The motion data of the environment simulation is transferred to the sensor simulation, which then calculates the models for radar, lidar, or camera sensors. This means that the raw data is simulated using the motion data and the complex 3-D scene with a realistic environment and complex objects, while taking into account the physical characteristics of the sensors. This calculation is performed on a high-performance graphics processor, which executes, for example, the ray tracing algorithms for the environment model calculation of radar and lidar. The result of this sensor simulation is then transmitted to the virtual sensor-based control units via Ethernet or virtual Ethernet. The same technology is used for the data exchange between the virtual sensor-based control units and the vehicle simulation.
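The effect of faster-than-real-time simulation on a PC cluster can be estimated with simple arithmetic. The sketch below assumes ideal linear scaling across cluster nodes, which real setups will not fully reach. All numbers are hypothetical and are not dSPACE performance figures.

```python
def wall_clock_hours(test_km, avg_speed_kmh, realtime_factor, nodes):
    """Wall-clock hours needed to simulate test_km kilometers.

    realtime_factor: simulated seconds per wall-clock second on one node.
    nodes: number of cluster nodes running scenarios in parallel
           (ideal linear scaling is assumed).
    """
    if min(avg_speed_kmh, realtime_factor, nodes) <= 0:
        raise ValueError("speed, real-time factor, and nodes must be positive")
    simulated_hours = test_km / avg_speed_kmh
    return simulated_hours / (realtime_factor * nodes)


# 1 million km at an average of 50 km/h, 10x real time, 100 nodes
hours = wall_clock_hours(test_km=1_000_000, avg_speed_kmh=50.0,
                         realtime_factor=10.0, nodes=100)
print(f"{hours:.1f} h")  # prints "20.0 h"
```

Driving the same distance in a single real vehicle at that average speed would take 20,000 hours.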

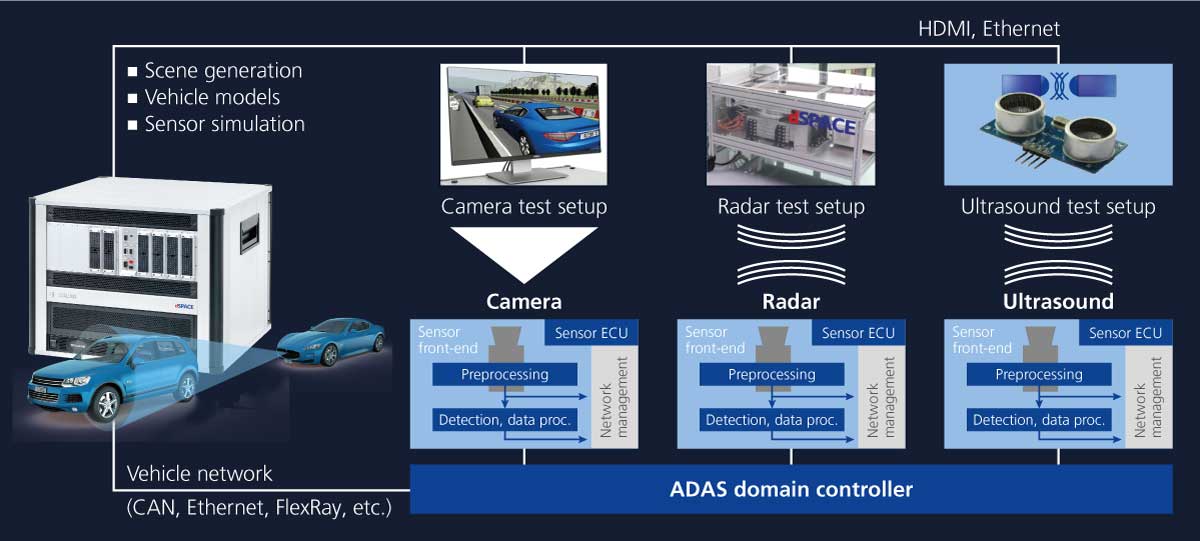

Validation via Hardware-in-the-Loop Simulation

Hardware-in-the-loop (HIL) simulations allow for testing real ECUs in the laboratory by stimulating them with recorded data or artificial test data. In contrast to SIL simulation, HIL simulation makes it possible to investigate the exact time behavior of the control units. The dSPACE SCALEXIO HIL platform performs the traffic, vehicle dynamics, and environment simulation. The vehicle simulation is then connected to the vehicle network, for example, via CAN or Ethernet, to perform a restbus simulation. The motion data of the vehicle and the other objects is sent via Ethernet to a powerful PC with a high-performance graphics processor on which the sensor environment models for camera, lidar, and radar are calculated. The data from the various sensors (raw data or target lists) is combined and transmitted to the Environment Sensor Interface (ESI) Unit via a DisplayPort interface. The ESI Unit has a highly modular design to support all relevant protocols and interfaces. The ESI Unit's high-performance FPGA converts the combined data stream from all sensors into individual data streams for the dedicated sensors and transmits them to the corresponding camera, lidar, or radar control units via the various interfaces.
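The splitting of one combined data stream into individual streams per sensor can be pictured as a demultiplexing step. The sketch below is a conceptual illustration in software only. The frame layout (a sensor identifier plus a payload) and all names are hypothetical and do not describe the actual ESI Unit data format or its FPGA implementation.

```python
from collections import defaultdict


def demultiplex(combined_frames):
    """Split a combined stream of (sensor_id, payload) frames.

    Returns a dict that maps each sensor_id to its payloads in
    their original order.
    """
    streams = defaultdict(list)
    for sensor_id, payload in combined_frames:
        streams[sensor_id].append(payload)
    return dict(streams)


combined = [
    ("camera_front", b"image-0"),
    ("radar_front", b"targets-0"),
    ("camera_front", b"image-1"),
    ("lidar_roof", b"points-0"),
]
streams = demultiplex(combined)
```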

The physical sensor models from dSPACE simulate raw data from camera, lidar and radar sensors with the highest accuracy.

Validation via Over-the-Air Stimulation

Over-the-air (OTA) stimulation is a classic method of testing sensors. It integrates the complete sensor-based control unit in the control loop (figure 5). This design is ideal for testing the front end of the sensor, such as the lens and image sensor of a camera. For this method, the vehicle, environment, and traffic simulations are calculated with SCALEXIO. If a camera sensor is to be tested, a traffic scene is simulated and displayed on a screen using a SCALEXIO simulator and MotionDesk. For the camera, the virtual scene then represents a real street scene. For radar sensors, the dSPACE Automotive Radar Test System (DARTS) directs radar echoes at the radar sensor in accordance with the driving scenario calculated by the SCALEXIO simulator. This enables the verification of driving functions such as ACC (adaptive cruise control) or AEB (autonomous emergency braking).

Summary

For autonomous vehicles to move safely in road traffic, the ADAS/AD functions must make the right decisions in all possible driving scenarios. However, due to the virtually unlimited number of possible driving scenarios, testing the required ADAS/AD functions in the laboratory is very complex. These tests can no longer be performed with only one test method. Instead, a combination of different test methods is required. The spectrum includes MIL, SIL, HIL, open-loop, and closed-loop tests as well as real test drives. In light of this complex test and tool landscape, the key to a reliable validation process lies in a flexible and integrated tool chain that offers versatile interfaces and integration options for simulation models and the devices to be tested. This is exactly why the dSPACE tool chain for sensor and environment simulation is so useful and effective: It offers coordinated tools from a single source that interact smoothly with each other to make the validation process very efficient.

dSPACE MAGAZINE, PUBLISHED MAY 2019