dSPACE Sensor Vehicle as an Example of Working with Data-Driven Development

In recent years, data-driven development has become one of the most important methods of software development in various industries. The automotive industry is leading the race, focusing on solving the self-driving car problem, where high-quality data plays a key role. To further develop its tools and provide cutting-edge features for the automotive industry, dSPACE has built an ecosystem for collecting high-quality data. To do this, the company uses its own sensor vehicle, which was developed specifically for this purpose.

The dSPACE Sensor Vehicle

As a key component of the above-mentioned ecosystem, the dSPACE Sensor Vehicle is equipped with the following sensors:

• A high-resolution Velodyne Alpha Prime LiDAR with 128 channels.

• Nine cameras providing surround and stereo vision at a resolution of 2,880 x 1,860 pixels.

• A 4-D UHD Radar.

• A high-precision GPS sensor for localization with an accuracy of a few centimeters.

Together, the sensors generate about 10 TB of data per hour. The dSPACE AUTERA AutoBox data logger in the roof box records the data from all sensors and writes it to SSD at a transfer rate of about 23 Gbit/s. Sensor data acquisition and system management are handled by the RTMaps middleware, which synchronizes all the hardware, manages the system's processes, and records the data.
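The two figures above can be cross-checked with a little arithmetic. The following is a minimal sketch that assumes decimal (SI) units and a sustained write rate; the function name is purely illustrative.

```python
# Figure quoted in the text; decimal (SI) units are an assumption.
WRITE_RATE_GBIT_PER_S = 23


def terabytes_per_hour(gbit_per_s: float) -> float:
    """Convert a sustained rate in Gbit/s to TB per hour (1 TB = 10**12 bytes)."""
    bytes_per_s = gbit_per_s * 1e9 / 8  # 8 bits per byte
    return bytes_per_s * 3600 / 1e12    # 3600 seconds per hour


print(f"{terabytes_per_hour(WRITE_RATE_GBIT_PER_S):.2f} TB/h")
```

Under these assumptions the result is 10.35 TB per hour, which matches the figure of about 10 TB per hour.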

However, before data collection can begin on the road, some prerequisites, such as sensor calibration, must be completed to enable certain processes further along the data-driven development pipeline.

Sensor Calibration Overview: Operating an autonomous vehicle requires calibration of the various sensors so that it can coordinate information received from multiple sensors.

Sensor Calibration: The What and the Why

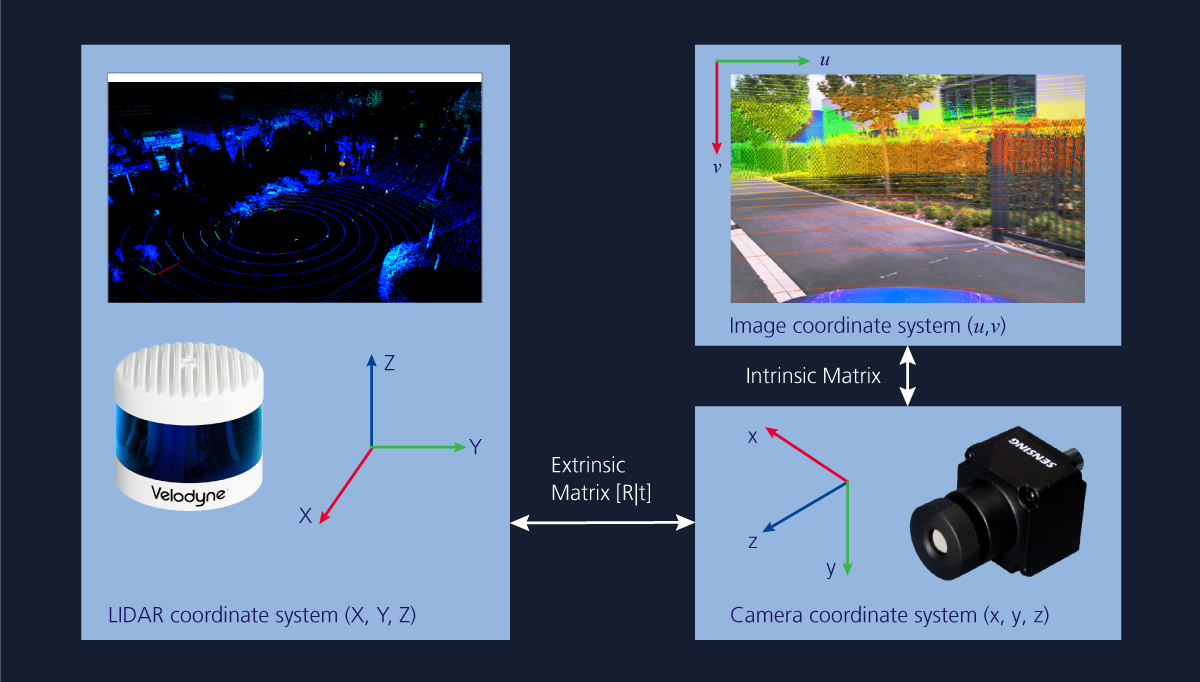

What is sensor calibration? In general, sensor calibration refers to the process of measuring how the output of a sensor corresponds to the actual value of the quantity being measured. In the context of data acquisition with a multi-sensor setup, however, the term refers to the geometric calibration of the sensors. There are two types of geometric calibration: intrinsic and extrinsic.

Intrinsic calibration refers to the calibration of a sensor with respect to itself, so that the measured data geometrically matches the actual scene. Intrinsic camera calibration, also referred to as camera resectioning, involves estimating the parameters of the lens and the image sensor. The estimated parameters can be used to remove image distortion caused by the lens.
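To make the role of the intrinsic parameters concrete, the following sketch projects a 3-D point onto the image using a pinhole model with two radial distortion coefficients. The model choice, the class and function names, and the numeric values are illustrative assumptions, not the parameters of the dSPACE Sensor Vehicle.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    fx: float        # focal length in pixels, x direction
    fy: float        # focal length in pixels, y direction
    cx: float        # principal point, x
    cy: float        # principal point, y
    k1: float = 0.0  # first radial distortion coefficient
    k2: float = 0.0  # second radial distortion coefficient


def project(point_cam, K: Intrinsics):
    """Project a 3-D point in the camera frame (z forward) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    xn, yn = x / z, y / z                 # normalized image coordinates
    r2 = xn * xn + yn * yn                # squared distance from the optical axis
    d = 1.0 + K.k1 * r2 + K.k2 * r2 * r2  # radial distortion factor
    return K.fx * d * xn + K.cx, K.fy * d * yn + K.cy


# Hypothetical values for a 2,880 x 1,860 image.
camera = Intrinsics(fx=2000.0, fy=2000.0, cx=1440.0, cy=930.0, k1=-0.1)
u, v = project((1.0, 0.5, 10.0), camera)
```

Intrinsic calibration estimates exactly these quantities. Once they are known, the distortion factor can be inverted to obtain an undistorted image.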

Extrinsic calibration refers to the calibration of one sensor to another, resulting in a geometric match between the data measured by one sensor and the data measured by another sensor. For example, in a sensor setup with a LiDAR and a camera, extrinsic calibration involves estimating the parameters that define the relative positions and orientations of the sensors. These parameters can be used to transform the measurement data from the LiDAR coordinate system into the camera coordinate system.
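As a minimal sketch, the extrinsic parameters can be written as a rotation matrix R and a translation vector t that map a LiDAR point into the camera frame. The axis conventions (LiDAR: x forward, y left, z up; camera: x right, y down, z forward) and the offsets below are assumptions chosen for illustration.

```python
def lidar_to_camera(point_lidar, R, t):
    """Apply p_cam = R * p_lidar + t, with R as a 3x3 nested list."""
    return tuple(
        sum(R[i][j] * point_lidar[j] for j in range(3)) + t[i]
        for i in range(3)
    )


# Assumed axis swap between the two conventions described above.
R_CAM_FROM_LIDAR = [
    [0.0, -1.0, 0.0],   # camera x (right)   = -LiDAR y (left)
    [0.0, 0.0, -1.0],   # camera y (down)    = -LiDAR z (up)
    [1.0, 0.0, 0.0],    # camera z (forward) =  LiDAR x (forward)
]
T_CAM_FROM_LIDAR = (0.0, -0.5, 0.1)  # hypothetical mounting offset in meters

point_cam = lidar_to_camera((10.0, 2.0, 1.0), R_CAM_FROM_LIDAR, T_CAM_FROM_LIDAR)
```

A point 10 m ahead of the LiDAR ends up roughly 10 m along the camera's optical axis, which is the input the intrinsic projection then expects.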

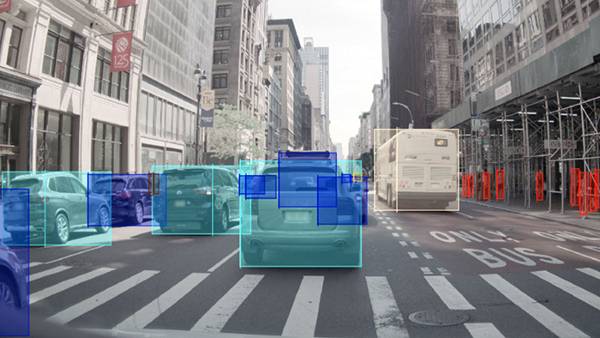

Why do we need sensor calibration? Calibration generally verifies the precision and reproducibility of sensors. Geometric calibration is necessary to transfer information from one sensor to another. Calibration of different sensors in relation to each other is necessary for the operation of an autonomous vehicle so that it can coordinate information received from multiple sensors. For example, many 3-D object detection algorithms combine camera images with LiDAR point cloud data to improve object detection performance. In addition, objects in the 3-D LiDAR point cloud can be precisely labelled to generate 3-D bounding box labels that can be easily applied to 2-D images from one or more cameras, to train neural networks for object recognition. This requires both intrinsic and extrinsic calibration of the camera with respect to the LiDAR.
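The label transfer described above can be sketched as follows: the eight corners of a 3-D box labeled in the LiDAR frame are projected into the image, and the enclosing rectangle becomes the 2-D label. This is a simplified illustration that assumes an axis-aligned box, a distortion-free pinhole camera, and all corners in front of the camera. All names and values are hypothetical.

```python
from itertools import product


def box_corners(center, size):
    """Eight corners of an axis-aligned 3-D box (center and size in meters)."""
    return [
        tuple(c + sign * s / 2 for c, s, sign in zip(center, size, signs))
        for signs in product((-1, 1), repeat=3)
    ]


def to_pixel(p_lidar, R, t, fx, fy, cx, cy):
    """Extrinsic transform followed by pinhole projection (no distortion)."""
    x, y, z = (sum(R[i][j] * p_lidar[j] for j in range(3)) + t[i] for i in range(3))
    return fx * x / z + cx, fy * y / z + cy


def box_2d(center, size, R, t, fx, fy, cx, cy):
    """Enclosing 2-D box (u_min, v_min, u_max, v_max) of a projected 3-D box."""
    pixels = [to_pixel(c, R, t, fx, fy, cx, cy) for c in box_corners(center, size)]
    us, vs = zip(*pixels)
    return min(us), min(vs), max(us), max(vs)


# Assumed LiDAR-to-camera axis swap and hypothetical intrinsics.
R = [[0.0, -1.0, 0.0], [0.0, 0.0, -1.0], [1.0, 0.0, 0.0]]
label = box_2d((10.0, 0.0, 0.0), (2.0, 2.0, 2.0), R, (0.0, 0.0, 0.0),
               fx=1000.0, fy=1000.0, cx=1440.0, cy=930.0)
```

Errors in either calibration shift the projected corners, which is why both the intrinsic and the extrinsic parameters need to be accurate for the 2-D labels to be usable.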

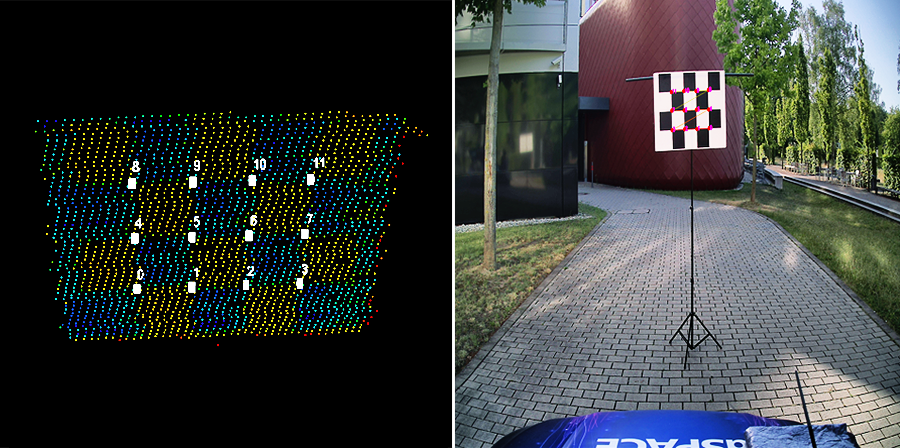

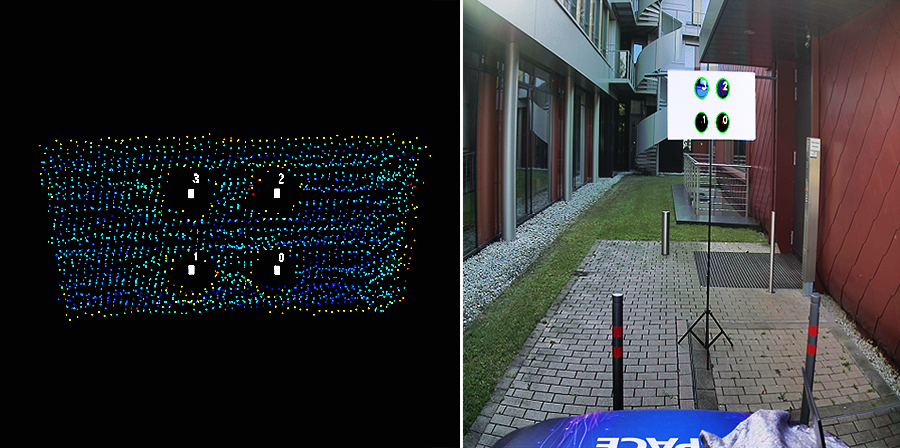

Key-point detection with checkerboard pattern: For extrinsic calibration, the key points must be detected in both the camera images (right) and the LiDAR point cloud data (left). The quality of the calibration depends on the accuracy of the key-point detection in the data of both sensors. This is the classic method.

How to Calibrate the Sensors

The most common example of sensor calibration for autonomous driving applications is the calibration of the camera and the LiDAR sensor. First and foremost, intrinsic calibration of the camera must be performed. The classic method for performing intrinsic camera calibration is to use a planar checkerboard target to compute the camera matrix and distortion parameters. The key points or corners of the checkerboard are detected in the image. Then, the parameters are computed by comparing the detected key points in the image with the known actual key points on the checkerboard pattern. To improve the accuracy and robustness of the calibration, certain heuristic approaches are used to ensure that the key-point detections are acquired with different target positions and enough samples per target position. The calculated intrinsic parameters are also used for the extrinsic calibration process.
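Two ingredients of this procedure can be sketched in a few lines: the known key points on the planar target, and a simple coverage check in the spirit of the heuristics mentioned above. The source does not describe the actual heuristics, so the grid-based check, its thresholds, and all names are hypothetical illustrations.

```python
from collections import Counter


def checkerboard_points(cols, rows, square_size):
    """Known 3-D positions of the inner corners on a planar target (Z = 0)."""
    return [
        (c * square_size, r * square_size, 0.0)
        for r in range(rows)
        for c in range(cols)
    ]


def coverage_ok(target_centers, image_size, grid=(3, 3), min_per_cell=5):
    """Hypothetical check: every image region holds enough target detections."""
    width, height = image_size
    counts = Counter(
        (
            min(int(u * grid[0] / width), grid[0] - 1),   # grid column of the detection
            min(int(v * grid[1] / height), grid[1] - 1),  # grid row of the detection
        )
        for u, v in target_centers
    )
    return all(
        counts[(i, j)] >= min_per_cell
        for i in range(grid[0])
        for j in range(grid[1])
    )


# A 9 x 6 grid of inner corners with 10 cm squares (illustrative dimensions).
object_points = checkerboard_points(cols=9, rows=6, square_size=0.1)
```

A grid of known target points like this, paired with the detected image corners, is the kind of input that checkerboard calibration routines such as OpenCV's `calibrateCamera` expect.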

For the extrinsic calibration, the key points must be detected in both the camera images and the LiDAR point cloud data. Because LiDAR point clouds are sparse compared with image data, detecting key points on the calibration targets is difficult. The quality of the calibration depends on the accuracy of the key-point detection in the data of both sensors. To investigate the calibration accuracy of the sensor setup, multiple calibration targets can be used to measure the calibration performance. The classic method is to use a planar checkerboard target for key-point detection. However, newer state-of-the-art methods propose the use of a 3-D target with circular holes, which is also suitable for detecting key points in sparse point clouds.

Heuristics ensure that only accurate key-point detections are retained to obtain a robust calibration. The detected key points in the camera coordinate system are compared to the corresponding key points in the LiDAR coordinate system to compute the extrinsic calibration parameters that define the translation and rotation of the coordinate systems relative to each other. Both the intrinsic and extrinsic calibration algorithms built into the RTMaps software record high-quality key-point detections live and evaluate the performance of the calibration algorithm.
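One common way to compute a rotation and translation from corresponding 3-D key points is the Kabsch algorithm, sketched below with NumPy. The source does not state which solver RTMaps uses, so this is an assumed stand-in. It further assumes that the key points are available as 3-D coordinates in both frames.

```python
import numpy as np


def estimate_rigid_transform(lidar_pts, camera_pts):
    """Least-squares R, t with camera_pts ~ R @ lidar_pts + t (Kabsch algorithm)."""
    P = np.asarray(lidar_pts, dtype=float)   # N x 3 key points, LiDAR frame
    Q = np.asarray(camera_pts, dtype=float)  # N x 3 key points, camera frame
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)
    H = (P - p_mean).T @ (Q - q_mean)        # 3 x 3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = q_mean - R @ p_mean
    return R, t
```

The function needs at least three non-collinear correspondences. With noisy detections, more key points spread across the scene generally give a more stable estimate.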

Thanks to the high-resolution LiDAR, both methods achieve high accuracy with a reprojection error in the range of 1 ± 0.1 pixel for the extrinsic calibration. The checkerboard pattern-based calibration converges faster than the 3-D target-based calibration for the sensor setup because the checkerboard pattern used contains three times more key points than the 3-D target. The calculated calibration parameters can be used to merge the RGB data of the image with the LiDAR point cloud data or the depth information of the LiDAR point cloud data with the image.
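The reprojection error and the fusion step mentioned above can be sketched as follows. The error is taken here as the mean pixel distance between LiDAR key points projected into the image and the key points detected in the image. The source does not specify the exact metric, so this definition is an assumption, as are the function names.

```python
import math


def mean_reprojection_error(projected, detected):
    """Mean Euclidean distance in pixels between paired 2-D points."""
    if len(projected) != len(detected) or not projected:
        raise ValueError("need two non-empty point lists of equal length")
    total = sum(
        math.hypot(pu - du, pv - dv)
        for (pu, pv), (du, dv) in zip(projected, detected)
    )
    return total / len(projected)


def colorize(pixels, image):
    """RGB value under each projected LiDAR point; None if outside the image."""
    height, width = len(image), len(image[0])
    colors = []
    for u, v in pixels:
        col, row = int(round(u)), int(round(v))  # nearest pixel
        inside = 0 <= row < height and 0 <= col < width
        colors.append(image[row][col] if inside else None)
    return colors
```

Here `image` is a nested list of rows of RGB tuples and `pixels` holds the projected positions of the LiDAR points. Writing the LiDAR range into the image at the same positions would give the reverse fusion, a sparse depth map.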

Key-point detection with 3-D target: This is the newer method. It uses a 3-D target with circular holes, which is also suitable for key-point detection in sparse point clouds. The detected key points in the camera coordinate system (right) are compared with the corresponding key points in the LiDAR coordinate system (left) to calculate the extrinsic calibration parameters.

Everything in sight

The video clearly shows how the dSPACE Sensor Vehicle's camera and LiDAR capture the environment in parallel.