Speeding Up the Development of ADAS Systems with Model-Based Development


Published: July 05, 2016

How do you speed up the development of electronic systems that are becoming ever more complex?

Kunal Patil, PhD, Sr. Applications Engineer, dSPACE Inc.

Advanced driver assistance systems (ADAS) have the potential to significantly reduce the number of road accidents by making vehicles safer. The ADAS market has evolved from comfort systems designed for high-speed highway driving to safety systems designed to operate in complex urban environments, and system complexity grows accordingly.

As control interventions in vehicle behavior grow more complex, it has become impossible to validate them reliably with prototype vehicles alone. To keep development effort manageable despite this complexity, relevant tests must be brought into the lab at an earlier development stage so that electronic control unit (ECU) interaction can be evaluated automatically.

Virtual test drives in the laboratory address this challenge. Model-based development of ECU software is increasingly used in the automotive industry. Especially for driver assistance systems, this approach lets engineers evaluate and validate functional concepts and requirements on a PC very early by means of model-in-the-loop (MIL) simulation, and reuse test libraries throughout the complete development process, which also comprises software-in-the-loop (SIL) and hardware-in-the-loop (HIL) simulation.

For driver assistance systems that interact with steering, braking, or throttle control, a detailed model of the vehicle and its dynamic behavior is essential, as are models for simulating the road, the driver, environmental sensors, and surrounding traffic. Moving the setup and validation of test libraries, driving maneuvers, and plant models to the early development phases saves precious HIL test time. The actual HIL tests can then increasingly be limited to verifying the test results generated during MIL or SIL simulation.
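To make the MIL idea concrete, here is a minimal sketch in Python. It is not a dSPACE tool or an ASM model: a toy ACC-style controller runs in closed loop with point-mass models of the ego vehicle and a lead vehicle, entirely on a PC. The spacing law, gains, time gap, and actuator limits are hypothetical values chosen for illustration; in practice such a loop would typically be built in Simulink with far more detailed plant models.

```python
from dataclasses import dataclass


@dataclass
class VehicleState:
    position: float  # longitudinal position along the road, m
    speed: float     # longitudinal speed, m/s


def plant_step(state: VehicleState, accel_cmd: float, dt: float,
               max_accel: float = 2.0, max_decel: float = -6.0) -> VehicleState:
    """Toy plant model: point mass with saturated acceleration (hypothetical limits)."""
    accel = min(max(accel_cmd, max_decel), max_accel)
    speed = max(0.0, state.speed + accel * dt)
    # Semi-implicit Euler: advance the position with the updated speed.
    return VehicleState(state.position + speed * dt, speed)


def acc_controller(gap: float, ego_speed: float, lead_speed: float,
                   time_gap: float = 1.8, standstill_gap: float = 5.0,
                   k_gap: float = 0.2, k_speed: float = 0.6) -> float:
    """Hypothetical constant-time-gap spacing law returning an acceleration command."""
    desired_gap = standstill_gap + time_gap * ego_speed
    return k_gap * (gap - desired_gap) + k_speed * (lead_speed - ego_speed)


def simulate_mil(duration: float = 60.0, dt: float = 0.01):
    """Closed loop: controller drives the ego plant; the lead vehicle keeps constant speed."""
    ego = VehicleState(position=0.0, speed=30.0)
    lead = VehicleState(position=60.0, speed=20.0)
    min_gap = lead.position - ego.position
    for _ in range(round(duration / dt)):
        gap = lead.position - ego.position
        accel_cmd = acc_controller(gap, ego.speed, lead.speed)
        ego = plant_step(ego, accel_cmd, dt)
        lead = plant_step(lead, 0.0, dt)
        min_gap = min(min_gap, lead.position - ego.position)
    return ego, lead, min_gap


if __name__ == "__main__":
    ego, lead, min_gap = simulate_mil()
    print(f"final ego speed {ego.speed:.2f} m/s, "
          f"final gap {lead.position - ego.position:.2f} m, min gap {min_gap:.2f} m")
```

Because the controller and the plant are plain functions, the same scenario (initial speeds and gap) and pass criteria can be kept as a test case while the controller is refined, which loosely mirrors the reuse of test libraries from MIL onward.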

dSPACE Platforms for ADAS Development

The figure below provides an overview of the dSPACE platform for ADAS development. It rests on three pillars: rapid control prototyping, virtual validation, and hardware-in-the-loop (HIL) simulation.

dSPACE rapid control prototyping (RCP) systems provide dedicated tools and a platform for ADAS applications. They let you develop, optimize, and test new control strategies quickly in a real environment without manual programming. During control design, the ADAS prototype has to be integrated into the vehicle like a real ECU and communicate with the vehicle’s bus systems (such as CAN). MicroAutoBox II and AutoBox are compact prototyping solutions for executing computation-intensive embedded software and integrating it into a vehicle’s electrical system.

dSPACE VEOS makes virtual validation possible. VEOS is dSPACE software for the C-code-based simulation of virtual ECUs (V-ECUs) and environment models on a host PC. It supports testing and validating ECU software in PC-based offline simulations, in closed loop with environment models. With virtual validation technology, you can prepare HIL tests and scenarios on a PC, and you can also use V-ECUs in HIL simulation if the ECU hardware prototype is not yet available.

The third pillar, HIL simulation, is explained in detail later in this blog.

How do you test ADAS features in the lab?

The answer is state-of-the-art hardware-in-the-loop testing, in which appropriate plant models for the ego vehicle, fellow vehicles, static objects, and sensors emulate the environment. ADAS functions are often distributed across the ECU network, so an end-to-end test is necessary to ensure the operational reliability of the system within the virtual vehicle. In addition to the usual component and integration tests on the HIL simulator, which also use dynamic plant models for sensors, integrating real sensors and actuators is especially important for ADAS applications.

HIL testing is enabled by real-time capable dynamic models. Modeling the dynamic behavior of physical and communication systems becomes fairly complex in ADAS development, because ADAS features depend on the state of the vehicle and its environment, including traffic, road conditions, communication with other vehicles and infrastructure, and signal interfaces such as radar, lidar, GPS, and cameras. With the ASM models described below, engineers can recreate a wide range of realistic test scenarios in the lab.

What makes ASM special?

Automotive Simulation Models (ASM) from dSPACE offer dynamic plant models, including traffic simulation, for ADAS development and testing. ASM are open Simulink models for the real-time simulation of automotive applications. Their modular setup makes it possible to combine different model libraries for vehicle variants and use cases. ASM models run on the HIL simulator, so the various ADAS tests can be performed realistically.

The ASM Traffic Library covers a wide range of ADAS use cases. ASM Traffic provides realistic vehicle, sensor, traffic, and environment simulation in real time. Adaptive cruise control (ACC), parking assistance, intersection assistance, traffic sign recognition, pedestrian recognition, predictive headlights, predictive cruise control and energy management, blind spot detection, lane departure warning, and emergency brake assist are some of the use cases we have in mind when we develop our plant models.

The flexibility and ease of use of ASM Traffic models make it possible to define virtually any kind of traffic scenario for thorough testing of ADAS controllers. ASM Traffic supports the creation of complex road networks on which you can define sophisticated traffic maneuvers. The simulated environment can contain static and movable objects, such as traffic signs and pedestrians, and various predefined and user-definable sensor models are available to detect them. To test pre-crash functions, you can define traffic scenarios that could result in an accident in real life and observe system behavior under these challenging conditions. Traffic scenarios can be modified and simulated immediately, without regenerating code.

Automotive Simulation Models and packages for driver assistance systems.

How do you create traffic scenarios with ASM?

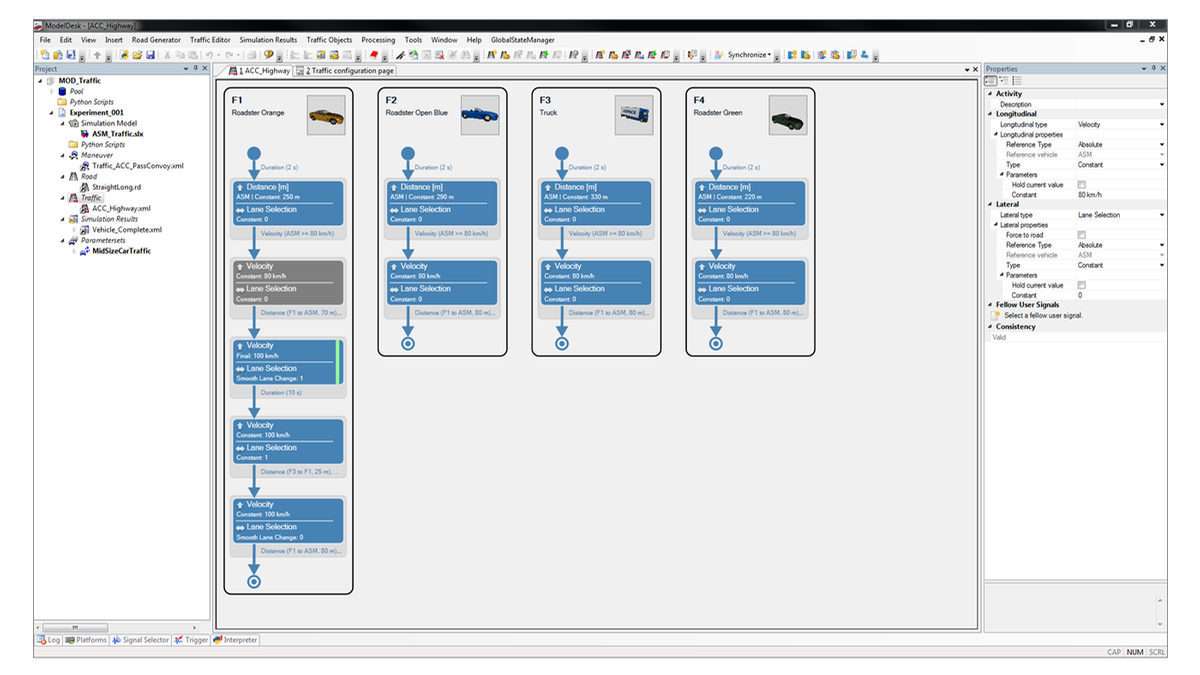

A traffic scenario primarily defines where and how fellow vehicles and objects around the ego vehicle move. ASM Traffic supports traffic scenarios with one test vehicle and a multitude of independent fellow vehicles, each of which can perform any desired action, such as lane changes, speed changes, crossing traffic, or oncoming traffic.
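In ASM, such scenarios are defined graphically in ModelDesk. As a rough, tool-independent illustration of what a scenario encodes, the Python sketch below describes fellow vehicles as data (an initial lane, position, and speed plus a time-triggered list of speed and lane changes) and evaluates where a vehicle is at a given time. The class names, the fixed 3.5 m lane width, the lane-index convention, and the smoothstep lane-change profile are all assumptions for this example; this is not ModelDesk's file format or ASM Traffic's maneuver logic.

```python
from dataclasses import dataclass, field
from typing import List, Tuple, Union

LANE_WIDTH = 3.5  # m, assumed constant lane width


@dataclass
class SpeedChange:
    start_time: float    # s
    target_speed: float  # m/s
    accel: float         # magnitude of acceleration or deceleration, m/s^2


@dataclass
class LaneChange:
    start_time: float  # s
    target_lane: int   # lane index, 0 = ego lane (hypothetical convention)
    duration: float    # s


Action = Union[SpeedChange, LaneChange]


@dataclass
class FellowVehicle:
    name: str
    lane: int
    station: float  # initial distance along the road, m
    speed: float    # initial speed, m/s
    actions: List[Action] = field(default_factory=list)


def longitudinal_state(v: FellowVehicle, t_end: float, dt: float = 0.01) -> Tuple[float, float]:
    """Integrate the scripted speed changes; return (station, speed) at t_end."""
    changes = sorted((a for a in v.actions if isinstance(a, SpeedChange)),
                     key=lambda a: a.start_time)
    station, speed = v.station, v.speed
    for i in range(round(t_end / dt)):
        t = i * dt
        active = [a for a in changes if a.start_time <= t]
        if active:
            target = active[-1]  # the most recently triggered speed change wins
            step = target.accel * dt
            if speed < target.target_speed:
                speed = min(target.target_speed, speed + step)
            else:
                speed = max(target.target_speed, speed - step)
        station += speed * dt
    return station, speed


def lateral_offset(v: FellowVehicle, t: float) -> float:
    """Lateral position in m at time t; assumes lane changes do not overlap in time."""
    lane = v.lane
    changes = sorted((a for a in v.actions if isinstance(a, LaneChange)),
                     key=lambda a: a.start_time)
    for lc in changes:
        if t < lc.start_time:
            break
        frac = min(1.0, (t - lc.start_time) / lc.duration)
        if frac < 1.0:
            blend = frac * frac * (3.0 - 2.0 * frac)  # smoothstep for a gradual maneuver
            return (lane + (lc.target_lane - lane) * blend) * LANE_WIDTH
        lane = lc.target_lane
    return lane * LANE_WIDTH


# Example scenario: a braking lead vehicle and a vehicle cutting in from the adjacent lane.
lead = FellowVehicle("lead", lane=0, station=50.0, speed=25.0,
                     actions=[SpeedChange(start_time=5.0, target_speed=15.0, accel=2.0)])
cut_in = FellowVehicle("cut_in", lane=1, station=30.0, speed=22.0,
                       actions=[LaneChange(start_time=3.0, target_lane=0, duration=4.0)])
```

Because the scenario is plain data, changing a target speed or trigger time alters the maneuver without touching the evaluation code, loosely analogous to the point above that ASM Traffic scenarios can be modified without regenerating code.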

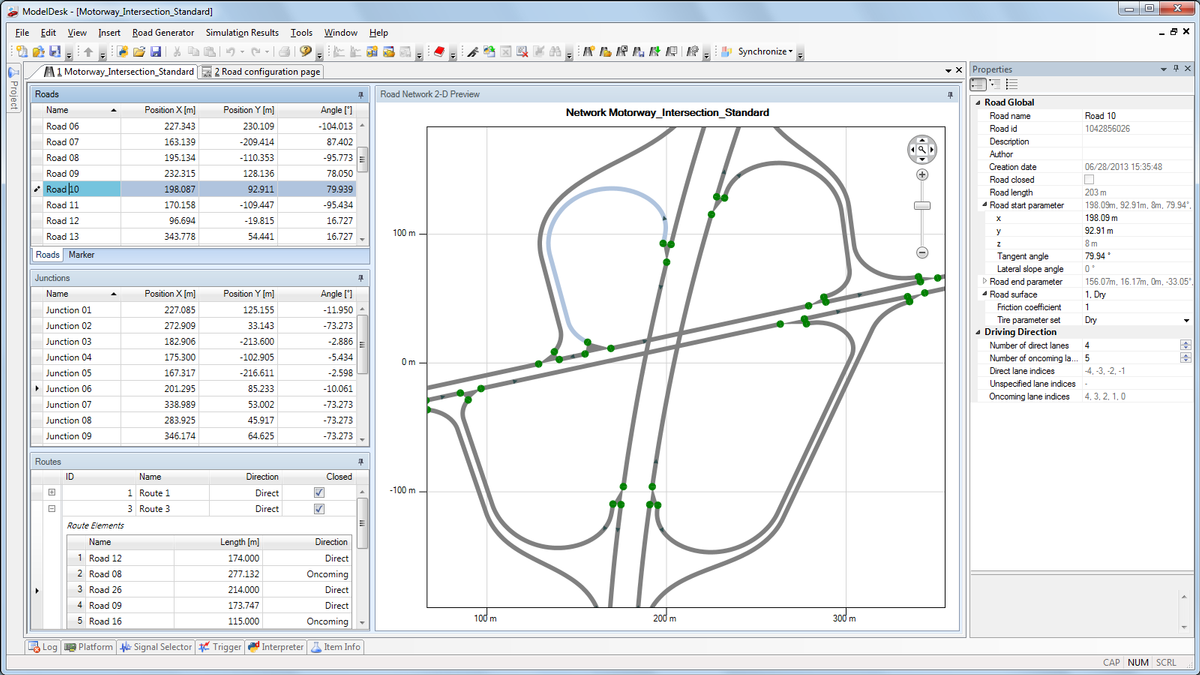

You can create traffic scenarios in ModelDesk's dedicated graphical user interface. The ModelDesk Road Generator is used to create road networks and sophisticated road features. A virtual road can be constructed manually from geometric segments, or complete road networks can be imported from map data such as OpenDRIVE, OpenCRG, GPS, ADAS RP, Google Earth, and OpenStreetMap. Road design is also closely coupled with the 3-D animation software MotionDesk for defining the environment.
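To illustrate what constructing a road from geometric segments involves, the sketch below chains straight lines and constant-curvature arcs and computes the position and heading at a given distance along the road (the station). It is a simplified example, not the ModelDesk Road Generator's internals: road formats such as OpenDRIVE also use clothoid (spiral) and polynomial segments, which are omitted here, and the `Line`/`Arc` classes are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple, Union


@dataclass
class Line:
    length: float  # m


@dataclass
class Arc:
    length: float     # arc length, m
    curvature: float  # 1/m; positive turns left, negative turns right


Segment = Union[Line, Arc]


def pose_at(segments: List[Segment], s: float,
            x: float = 0.0, y: float = 0.0, heading: float = 0.0) -> Tuple[float, float, float]:
    """Return (x, y, heading) at station s; stations past the end return the end pose."""
    remaining = s
    for seg in segments:
        d = min(remaining, seg.length)
        if isinstance(seg, Arc) and seg.curvature != 0.0:
            k = seg.curvature
            # Closed-form integration of dx/ds = cos(h), dy/ds = sin(h) with h = h0 + k*s.
            x += (math.sin(heading + k * d) - math.sin(heading)) / k
            y -= (math.cos(heading + k * d) - math.cos(heading)) / k
            heading += k * d
        else:
            x += d * math.cos(heading)
            y += d * math.sin(heading)
        remaining -= d
        if remaining <= 0.0:
            break
    return x, y, heading


# Example: 100 m straight followed by a 90-degree left curve with a 50 m radius.
road = [Line(100.0), Arc(length=math.pi * 50.0 / 2.0, curvature=1.0 / 50.0)]
```

Evaluating `pose_at(road, 100.0 + math.pi * 25.0)` gives the end of the curve at roughly (150, 50) with a heading of about pi/2, i.e., the road now points along the y axis.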

The movements of the fellow vehicles on a road network are defined in the graphical user interface of the ModelDesk Traffic Editor. Traffic scenarios simulated with ASM Traffic can be visualized in real-time 3-D animation in MotionDesk. ModelDesk and MotionDesk work hand in hand: changes made in ModelDesk can be viewed immediately in MotionDesk.

ModelDesk lets you define scenes for the automatic generation of 3-D sceneries in MotionDesk. For example, you can define country roads, tree-lined roads, and urban areas, and completely parameterize them: with a road embankment or border area, tree spacing, reflector posts, street lamps, building types, and so on. Fine adjustments can be made afterwards in MotionDesk and its integrated Scene Editor.

How do you integrate real sensors and actuators?

One focus of ADAS testing can be end-to-end testing, that is, from the first sensor in the signal chain to the last actuator that executes an action. Camera-based driver assistance systems such as lane departure warning and lane keeping assist are one example. Instead of validating the overall system on the road by driving thousands of test kilometers, most of the associated tests can be performed in the laboratory by means of HIL simulation and virtual test drives.

The 3-D visualization tool MotionDesk generates synthetic camera images of the road and traffic ahead of the ego vehicle. These images are displayed on a screen that is monitored by the image processing ECU with its integrated camera sensor. For the ECU to interpret these images as a real traffic environment, the camera focus has to be adapted to the actual distance to the screen, and the generated images have to match the perspective of the front camera in the real vehicle. For this purpose, settings such as the position and orientation of the associated virtual camera are typically defined in the 3-D visualization tool.
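Matching the perspective of the real front camera essentially means rendering the scene through a virtual camera with the same mounting position, orientation, and optics. The sketch below uses an idealized pinhole model (no lens distortion, pitch angle only) to show how a point in vehicle coordinates maps to image pixels. The mounting height, pitch angle, focal length in pixels, and image center are hypothetical values, and this is not MotionDesk's camera configuration interface.

```python
import math
from typing import Optional, Tuple


def project_point(point_vehicle: Tuple[float, float, float],
                  cam_pos: Tuple[float, float, float],
                  pitch_down_rad: float,
                  focal_px: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Project a point in vehicle coordinates (x forward, y left, z up, in m) onto the
    image of a forward-looking pinhole camera pitched down by pitch_down_rad.
    Returns pixel coordinates (u to the right, v downward) or None if behind the camera."""
    dx = point_vehicle[0] - cam_pos[0]
    dy = point_vehicle[1] - cam_pos[1]
    dz = point_vehicle[2] - cam_pos[2]
    c, s = math.cos(pitch_down_rad), math.sin(pitch_down_rad)
    # Camera optical frame: x_c right, y_c down, z_c along the optical axis.
    x_c = -dy
    y_c = -s * dx - c * dz
    z_c = c * dx - s * dz
    if z_c <= 0.0:
        return None
    return cx + focal_px * x_c / z_c, cy + focal_px * y_c / z_c


# Hypothetical camera: mounted 1.2 m above the road, 1280 x 720 image, f = 1000 px.
CAM_POS = (0.0, 0.0, 1.2)
```

With zero pitch, a road point 10 m straight ahead lands 120 px below the image center; tilting the virtual camera down by atan(1.2 / 10) moves that same point onto the center. If either the pose or the focal length differs from the real camera, the ECU would see lane markers at shifted positions and apparent distances.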

In addition, image generation on the graphics card has to be synchronized with the frame clock of the camera sensor, and the 3-D visualization software on the PC has to produce images at a fairly constant frame rate, for example 60 Hz. This way, the image processing ECU is stimulated in a real-time, closed-loop simulation, and the detected shapes and positions of lane markers are forwarded to the lane departure warning and lane keeping assist algorithms.
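At 60 Hz, each frame slot lasts 1000 / 60 ≈ 16.67 ms. One simple way to check whether the visualization keeps up is to compare logged frame timestamps against that nominal period, as in the Python sketch below. The function names and the idea of evaluating a timestamp log are assumptions for illustration; the actual synchronization between the graphics output and the camera's frame clock happens in hardware and drivers, which this sketch does not model.

```python
from typing import List


def frame_period_ms(rate_hz: float) -> float:
    """Nominal time between frames in milliseconds."""
    return 1000.0 / rate_hz


def _intervals(timestamps_ms: List[float]) -> List[float]:
    return [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]


def max_jitter_ms(timestamps_ms: List[float], rate_hz: float) -> float:
    """Largest deviation of any frame-to-frame interval from the nominal period.
    Requires at least two timestamps."""
    period = frame_period_ms(rate_hz)
    return max(abs(dt - period) for dt in _intervals(timestamps_ms))


def dropped_frames(timestamps_ms: List[float], rate_hz: float) -> int:
    """Estimate skipped frame slots by rounding each interval to whole periods."""
    period = frame_period_ms(rate_hz)
    return sum(max(0, round(dt / period) - 1) for dt in _intervals(timestamps_ms))
```

For a log such as `[0.0, 16.7, 50.0, 66.7]`, the 33.3 ms interval spans two frame slots, so `dropped_frames` reports one missed frame.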

In contrast to the camera, the radar sensor cannot be used directly in a HIL setup to detect traffic objects. Instead, a suitable sensor model is required to simulate the characteristics of the real radar sensor. Automotive Simulation Models feature a generic sensor model that can be adapted specifically to this use case. The sensor model calculates the nearest point of each road user in 3-D space and outputs the associated distance, relative velocity, relative acceleration, and relative horizontal angle. In this way, the object data normally output by the real radar sensor is calculated during the virtual test drive.
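The object-list computation described above can be sketched in a simplified 2-D form. The Python example below is not the ASM sensor model: it assumes road users are rectangles aligned with the sensor axes, uses hypothetical range and field-of-view limits, and reports relative velocity and acceleration as longitudinal differences between object and ego vehicle. It finds the nearest point of each road user, then outputs distance, relative velocity, relative acceleration, and horizontal angle for the objects inside the detection area, nearest first.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RoadUser:
    obj_id: int
    x: float       # center, m, in the sensor frame (x forward, y left)
    y: float
    length: float  # m, along x (assumed aligned with the sensor axes)
    width: float   # m, along y
    vx: float      # longitudinal velocity over ground, m/s
    ax: float      # longitudinal acceleration over ground, m/s^2


@dataclass
class Detection:
    obj_id: int
    distance: float          # m, to the nearest point
    rel_velocity: float      # m/s, object minus ego (longitudinal)
    rel_acceleration: float  # m/s^2, object minus ego (longitudinal)
    azimuth: float           # rad, horizontal angle of the nearest point, left positive


def nearest_point(u: RoadUser) -> Tuple[float, float]:
    """Closest point of the road user's rectangle to the sensor origin."""
    px = min(max(0.0, u.x - u.length / 2), u.x + u.length / 2)
    py = min(max(0.0, u.y - u.width / 2), u.y + u.width / 2)
    return px, py


def radar_object_list(users: List[RoadUser], ego_vx: float, ego_ax: float,
                      max_range: float = 150.0,
                      half_fov: float = math.radians(30.0)) -> List[Detection]:
    detections = []
    for u in users:
        px, py = nearest_point(u)
        distance = math.hypot(px, py)
        azimuth = math.atan2(py, px)
        if distance > max_range or abs(azimuth) > half_fov:
            continue  # outside the hypothetical detection area
        detections.append(Detection(u.obj_id, distance, u.vx - ego_vx,
                                    u.ax - ego_ax, azimuth))
    return sorted(detections, key=lambda d: d.distance)


traffic = [
    RoadUser(1, x=30.0, y=0.0, length=4.5, width=1.8, vx=20.0, ax=-1.0),
    RoadUser(2, x=40.0, y=3.5, length=4.5, width=1.8, vx=27.0, ax=0.0),
    RoadUser(3, x=-20.0, y=0.0, length=4.5, width=1.8, vx=25.0, ax=0.0),  # behind
    RoadUser(4, x=200.0, y=0.0, length=4.5, width=1.8, vx=25.0, ax=0.0),  # too far
]
```

With the ego vehicle at 25 m/s, `radar_object_list(traffic, 25.0, 0.0)` returns objects 1 and 2: the rear bumper of object 1 lies 27.75 m ahead, closing at 5 m/s.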

To validate the behavior of the overall system, the object data emulated by the radar sensor model is directly fed into the ECU via a dedicated high-speed, low-latency interface. If the radar ECU is not directly integrated in the HIL test setup, information about detected objects can be provided to other ECUs via the CAN or FlexRay bus as residual bus simulation, also called restbus simulation.
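In a restbus setup, the emulated object data has to be packed into bus messages in the same format the real radar ECU would use. The Python sketch below packs one object into an 8-byte classic CAN payload with the standard `struct` module. The signal layout (field order, bit widths, scaling factors, little-endian byte order) is entirely hypothetical; a real setup takes it from the vehicle's communication matrix, for example a DBC file.

```python
import struct
from typing import Tuple

# Hypothetical layout: id (uint8), distance (uint16, 0.01 m/bit),
# relative velocity (int16, 0.01 m/s per bit), relative acceleration (int8, 0.1 m/s^2 per bit),
# azimuth (int16, 0.0001 rad/bit). Little-endian, no padding: 1 + 2 + 2 + 1 + 2 = 8 bytes.
OBJECT_LAYOUT = struct.Struct("<BHhbh")
SCALES = (1.0, 0.01, 0.01, 0.1, 0.0001)
LIMITS = ((0, 255), (0, 65535), (-32768, 32767), (-128, 127), (-32768, 32767))


def encode_object(obj_id: int, distance_m: float, rel_vel_mps: float,
                  rel_acc_mps2: float, azimuth_rad: float) -> bytes:
    """Scale physical values to raw integers, saturate to each field's range, and pack."""
    physical = (obj_id, distance_m, rel_vel_mps, rel_acc_mps2, azimuth_rad)
    raw = [min(max(round(value / scale), lo), hi)
           for value, scale, (lo, hi) in zip(physical, SCALES, LIMITS)]
    return OBJECT_LAYOUT.pack(*raw)


def decode_object(payload: bytes) -> Tuple[float, ...]:
    """Inverse of encode_object, e.g., to check what the receiving ECU will see."""
    return tuple(raw * scale for raw, scale in zip(OBJECT_LAYOUT.unpack(payload), SCALES))
```

Saturating instead of wrapping around keeps out-of-range values (for example, a distance beyond 655.35 m) at the field limit, which is one common convention; the real ECU specification decides how such values must be signaled.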

HIL setup for testing radar- and camera-based active safety systems.

Using the tool chain from dSPACE presented here, the same models, tools and tests can be used continuously for PC offline and real-time HIL simulation. It allows the assessment and validation of different driver assistance systems during MIL, SIL and HIL simulation. Dedicated models and tools are available especially for testing radar- and camera-based applications, which are increasingly common in modern production vehicles.

- Automotive Simulation Models: Tool suite for simulating the engine, vehicle dynamics, electrical system, and traffic environment

- MotionDesk: 3-D online animation of simulated mechanical systems in real time, e.g., for visualizing ADAS or vehicle dynamics scenarios

- MotionDesk end-of-life announcement: The end-of-life for MotionDesk is planned for December 2024
